Article originally published in INTERFACE magazine (n°136 – Q4 2019), edited by INSA Lyon alumni. Reproduced here with their kind permission.

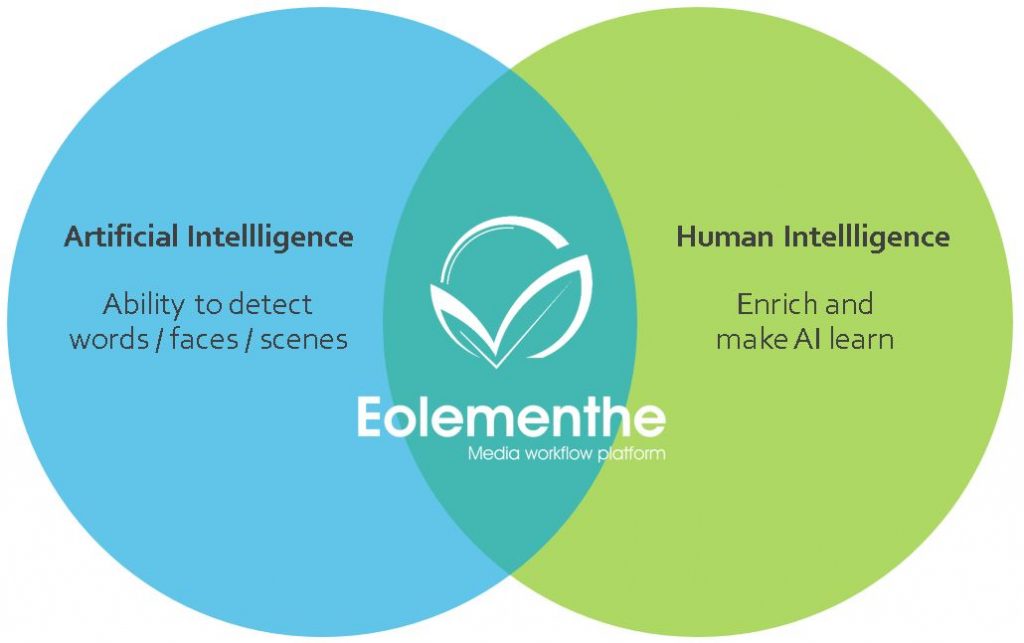

Artificial intelligence – undoubtedly the buzzword for 2019 – is generating plenty of excitement, but also certain fears. The main concern is that it could replace human beings – but what if we looked at AI in a different way? What if human beings could in fact make AI more intelligent and efficient, opening a door to new kinds of job opportunities?

I’ve been working in the professional video industry for 20 years now, and this is a sector undergoing profound change. The arrival of IT in the 2000s, followed by the Cloud in 2010, has changed traditional ways of working in the industry, from a human, technical and commercial perspective.

In fact, companies now need a whole new breed of technical staff to set up the required infrastructure. Those with IT and computer networking backgrounds predominate, and “traditional” technical staff and video operators may feel neglected by their management. The advent of the Cloud has only intensified this perception, adding a sense of lacking the skills now in demand. The use of online services sparks further debate, and division between the different teams.

But this is just the beginning. Artificial Intelligence has now burst onto the scene, bringing another significant change to manage. Cloud services place vast computational resources at our fingertips, giving us enormous data-processing capacity and allowing us to create rules and logic and carry out sorting operations. AI is set to shake up the human/machine relationship once again, and disrupt the balance.

AI: what are we talking about exactly?

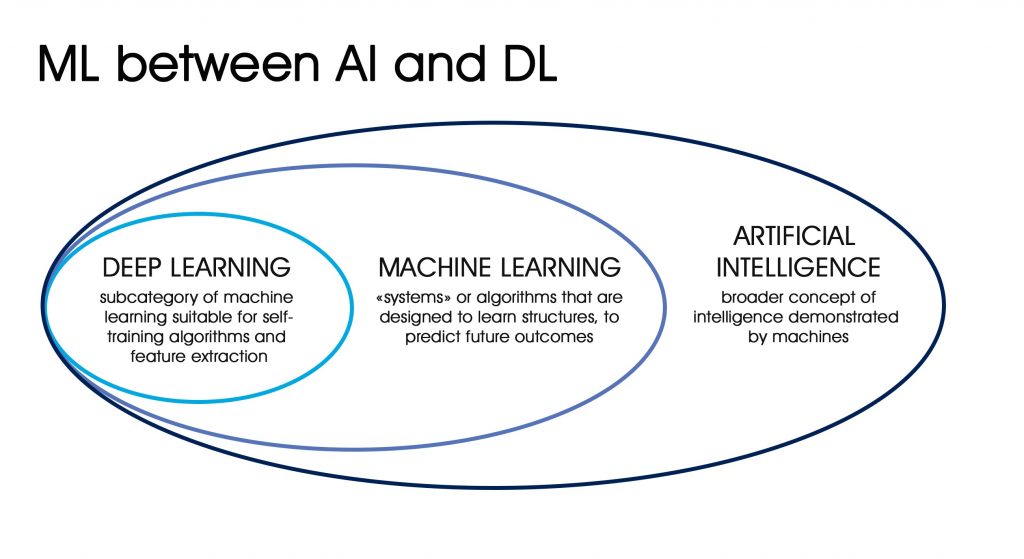

An algorithm alone is not AI, despite what some companies claim. Artificial Intelligence actually covers several technologies, including Machine Learning and Deep Learning.

According to Dony Ryanto (source: ‘Machine learning, Deep learning, AI, Big Data, Data Science, Data Analytics’ by Dony Ryanto, January 2019), machine learning (ML) is a field of artificial intelligence that uses statistical techniques to give computer systems the ability to “learn” (in other words to progressively improve their performance on a specific task) from data, without being explicitly programmed in advance.

Deep Learning (DL) is a subset of machine learning based on artificial neural networks; on some tasks it can achieve results as good as or even better than human beings. Deep Learning is used in particular for image and voice recognition, automated translation, medical image analysis, social media filters, etc.

Artificial Intelligence for video

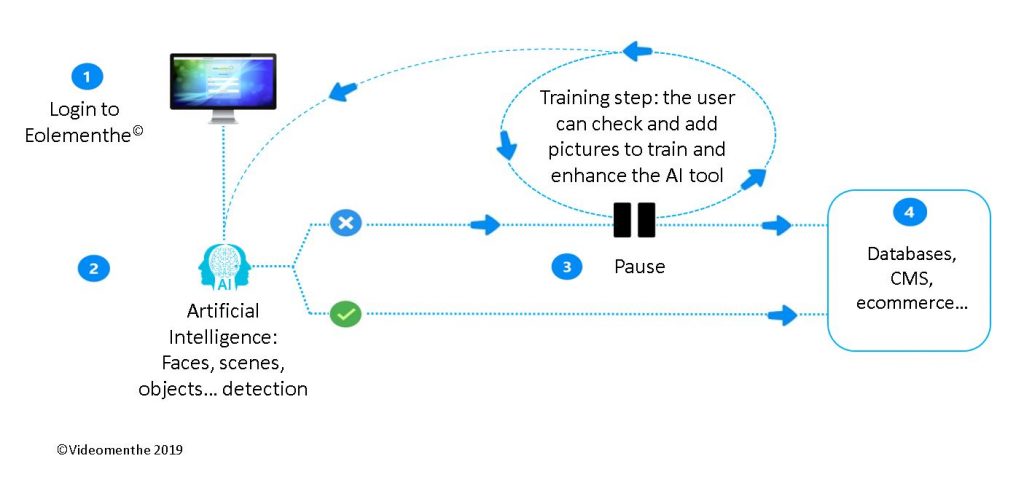

18 months ago, my team began integrating AI as part of the range of tools offered by Eolementhe – our collaborative-working web platform – enabling media, marketing and HR professionals to process and deliver videos with ease.

According to Gartner (source: ‘A Framework for Applying AI in the Enterprise’, June 2017):

“In general, AI is leveraged into digital businesses in one of six key ways: (1) dealing with complexity, (2) making probabilistic predictions, (3) learning, (4) acting autonomously, (5) appearing to understand and (6) reflecting a well-scoped or well-defined purpose.”

In the field of video, we can easily identify several areas to which Machine Learning can be applied: prediction, which saves time when detecting objects, places and people, and transcription.

Here are a few examples:

Indexing: document databases & archives

Central archives, media libraries and the multimedia resource centers of training organizations, big corporations and institutions, etc., handle and store a large number of videos, which may later be repurposed to create fresh content on a particular topic. The challenge is then to index this content appropriately using keywords and images.

Some examples of this are: identifying and listing all public figures (politicians, sports personalities, actors, etc.) who appear in a video, or identifying locations (such as town, beach, factory, station, etc.) or objects (cars, bicycles, etc.), making it easier to search for material to illustrate a specific subject (a train drivers’ strike, for example). AI can automatically select appropriate material (for instance, using facial recognition) to facilitate content repurposing. Forget about expensive, time-consuming, error-prone manual indexing: human staff can now focus on higher-added-value tasks.
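To make the indexing idea concrete, here is a minimal sketch in Python. It assumes that a recognition service has already returned a list of labels for each video; the file names, labels and function names are all hypothetical, and no particular AI product is implied. The labels are turned into an inverted index so that a search such as “train” plus “station” returns the matching videos.

```python
from collections import defaultdict

# Hypothetical output of an AI tagging step: labels detected in each video.
detections = {
    "strike_report.mp4": ["train", "station", "crowd"],
    "beach_holiday.mp4": ["beach", "bicycle"],
    "commuter_story.mp4": ["station", "bicycle", "train"],
}

def build_index(detections):
    """Build an inverted index: label -> set of videos showing that label."""
    index = defaultdict(set)
    for video, labels in detections.items():
        for label in labels:
            index[label.lower()].add(video)
    return index

def search(index, *labels):
    """Return the videos that contain every requested label, sorted by name."""
    sets = [index.get(label.lower(), set()) for label in labels]
    if not sets:
        return []
    return sorted(set.intersection(*sets))

index = build_index(detections)
print(search(index, "train", "station"))
# ['commuter_story.mp4', 'strike_report.mp4']
```

In this sketch the recognition itself is out of scope; only the use of its results for search is shown.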

Selection of content before delivery or broadcast

Another example: content to be delivered or broadcast on TV channels, websites, social media, etc., can first be screened by Artificial Intelligence against the broadcaster’s predefined criteria. For instance, the AI can pick out locations, faces, words, etc., to meet the requirements of special-interest channels or those with a young audience, or to comply with restrictions applied in some countries (in relation to nudity, alcohol, etc.).
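A pre-broadcast check of this kind could look roughly like the sketch below. It assumes that the AI returns each detected label with a confidence score between 0 and 1; the `ChannelRules` class, the threshold and the sample data are illustrative assumptions, not a description of any real broadcaster’s rules.

```python
from dataclasses import dataclass, field

@dataclass
class ChannelRules:
    """Hypothetical broadcaster criteria for one channel."""
    name: str
    banned_labels: set = field(default_factory=set)
    min_confidence: float = 0.8  # ignore detections the AI is unsure about

def review(detections, rules):
    """Return the banned labels detected with enough confidence.

    `detections` is a list of (label, confidence) pairs.
    """
    return sorted({
        label
        for label, confidence in detections
        if label in rules.banned_labels and confidence >= rules.min_confidence
    })

kids = ChannelRules("kids channel", {"alcohol", "nudity"})
clip = [("beach", 0.97), ("alcohol", 0.91), ("nudity", 0.35)]

flagged = review(clip, kids)
decision = "hold for human review" if flagged else "cleared"
print(flagged, decision)
# ['alcohol'] hold for human review
```

Note that the sketch only flags the clip: the final decision is left to a person, in line with the human-in-the-loop approach discussed later in the article.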

Transcription and translation for subtitling

Another promising area is training the Artificial Intelligence to recognize certain terms in a video (terminology specific to a business sector, prohibited/restricted words, brands, etc.), so that it improves as it learns. The goal is to offer a very relevant, efficient transcription service and then generate high-quality multilingual subtitles. Words included in the subtitles can also be used as tags to facilitate media indexing.
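As a simplified illustration of sector-specific terms feeding both transcription and tagging, the sketch below applies a hand-maintained glossary to a raw transcript and then reuses the recognized terms as tags. This is plain text substitution, not machine learning: in a real system the glossary would more likely be supplied to the speech-recognition engine itself. The glossary entries and function names are assumptions made for the example.

```python
import re

# Hypothetical glossary: how a term may come out of transcription -> correct form.
GLOSSARY = {
    "eolementhe": "Eolementhe",
    "auto ml": "AutoML",
}

def apply_glossary(text, glossary):
    """Replace each known mis-transcription with its correct spelling."""
    for wrong, right in glossary.items():
        pattern = rf"\b{re.escape(wrong)}\b"
        text = re.sub(pattern, right, text, flags=re.IGNORECASE)
    return text

def extract_tags(text, glossary):
    """Reuse the glossary terms found in the text as indexing tags."""
    return sorted({term for term in glossary.values() if term in text})

raw = "we trained eolementhe with auto ml last year"
clean = apply_glossary(raw, GLOSSARY)
print(clean)
# we trained Eolementhe with AutoML last year
print(extract_tags(clean, GLOSSARY))
# ['AutoML', 'Eolementhe']
```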

SEO, or how to improve search engine ranking

Similarly, metadata extracted by the Artificial Intelligence (such as title, keywords, people featured, transcription, etc.) can improve videos’ organic ranking on search engines and increase their visibility.
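One common way to expose such metadata to search engines is schema.org markup embedded in the video’s web page. The sketch below maps hypothetical extracted fields onto a schema.org `VideoObject` in JSON-LD form. The input dictionary and the function name are assumptions, and search engines typically expect further fields (such as a thumbnail and an upload date) that are not shown here.

```python
import json

# Hypothetical metadata extracted by the AI, using the fields listed above.
extracted = {
    "title": "Train drivers' strike: commuters adapt",
    "keywords": ["strike", "train", "station"],
    "people": ["Jane Doe"],
    "transcript": "Commuters turned to bicycles this morning...",
}

def to_video_json_ld(meta):
    """Map extracted metadata onto schema.org VideoObject properties."""
    return {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": meta["title"],
        "keywords": ", ".join(meta["keywords"]),
        "transcript": meta["transcript"],
        "mentions": [{"@type": "Person", "name": p} for p in meta["people"]],
    }

markup = to_video_json_ld(extracted)
print(json.dumps(markup, indent=2))
```

The resulting JSON would usually be placed in a `<script type="application/ld+json">` element on the page that hosts the video.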

Create a supplementary learning loop to benefit from the best of both worlds

One of the paradoxes of Artificial Intelligence is that it needs us in order to learn. Forget about the fantasy of the all-powerful, self-aware AI, able to replace human beings in every way. Without the ability to learn, an AI tool will be limited in scope.

Several multinational corporations are currently working on Artificial Intelligence for video: Google, Microsoft and IBM, as well as software publishers specializing in a particular area (transcription, etc.).

In the B2B market, software publishers train their AI tool in-house. To limit the risk of errors in the data, users do not contribute to a shared learning process. Data is key, and it is essential to keep it under control. (You know the expression “garbage in, garbage out”?)

On the other hand, some software publishers supply “empty” software. Then it’s up to you to train it according to your own needs. New technologies are also emerging that will enable companies to develop their own learning models without relying on the (scarce) skills of experts or data scientists. One of these new trends is AutoML, which aims to make it easier to create machine-learning models.

At Videomenthe, even prior to incorporating AI, we chose to combine automated Cloud services with the option of manual intervention, to provide our clients with a fast, high-quality result.

Conclusion

We need to demystify Artificial Intelligence. When used in a relevant, ethical, well-thought-out way, AI can give users more time and eliminate tedious and cumbersome tasks. This is typically the case in the field of video, where human expertise is essential in adding value to content.

“Ten percent of firms leveraging AI will bring human expertise back into the loop” (source: Forrester Predictions 2019)

Future developments will naturally feature AI, because it will no longer be a buzzword, but an accepted fact of life.

MURIEL LE BELLAC, Videomenthe CEO